I've been researching how open source technology spent the last 20 years giving the middle finger to corporate control. The argument writes itself. Proprietary software and walled gardens make people dumber, more dependent, and less creative because you never learn how anything works under the hood. Open source is the technical rebellion that corporations hate.

Here's the thing. Researching this properly means digging through 20+ years of mailing lists, GitHub repos, blog posts, corporate PR disasters, and obscure documentation. That's hundreds of hours if you do it manually. LLMs can compress that timeline without sacrificing quality.

I'm not talking about asking ChatGPT to write your argument. That's lazy and produces generic garbage. I'm talking about using LLMs as research assistants that can process information faster than you can read.

Stop Calling Them AI

Quick clarification. Everyone calls these tools "AI" because that's the marketing term that gets clicks and funding. They're not artificial intelligence. They're Large Language Models (LLMs): pattern-matching systems trained on massive text datasets.

Real AI would understand context, reason about problems, and learn new concepts without retraining. LLMs predict the next word based on statistical patterns. That's it. No understanding, no reasoning, no consciousness. Just math and probability.

The "AI" label is corporate bullshit designed to make these tools sound more impressive than they are. Same playbook as calling everything "cloud" when it's just someone else's computer. Marketing teams love vague terms that sound futuristic.

This matters because people treat LLMs like oracles instead of glorified autocomplete. They're tools. Useful ones, but tools. Not magic, not sentient, not intelligent.

How LLMs Help

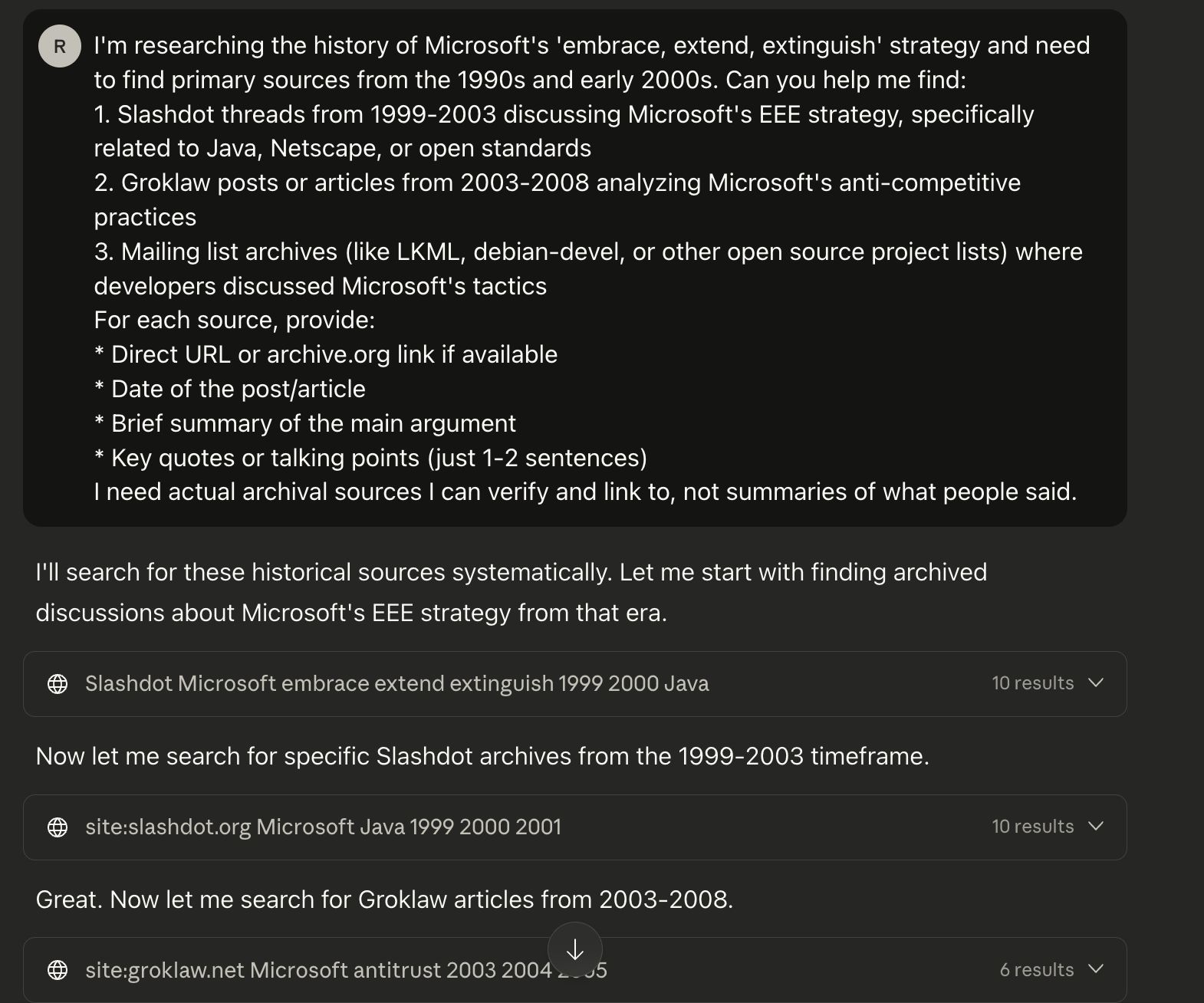

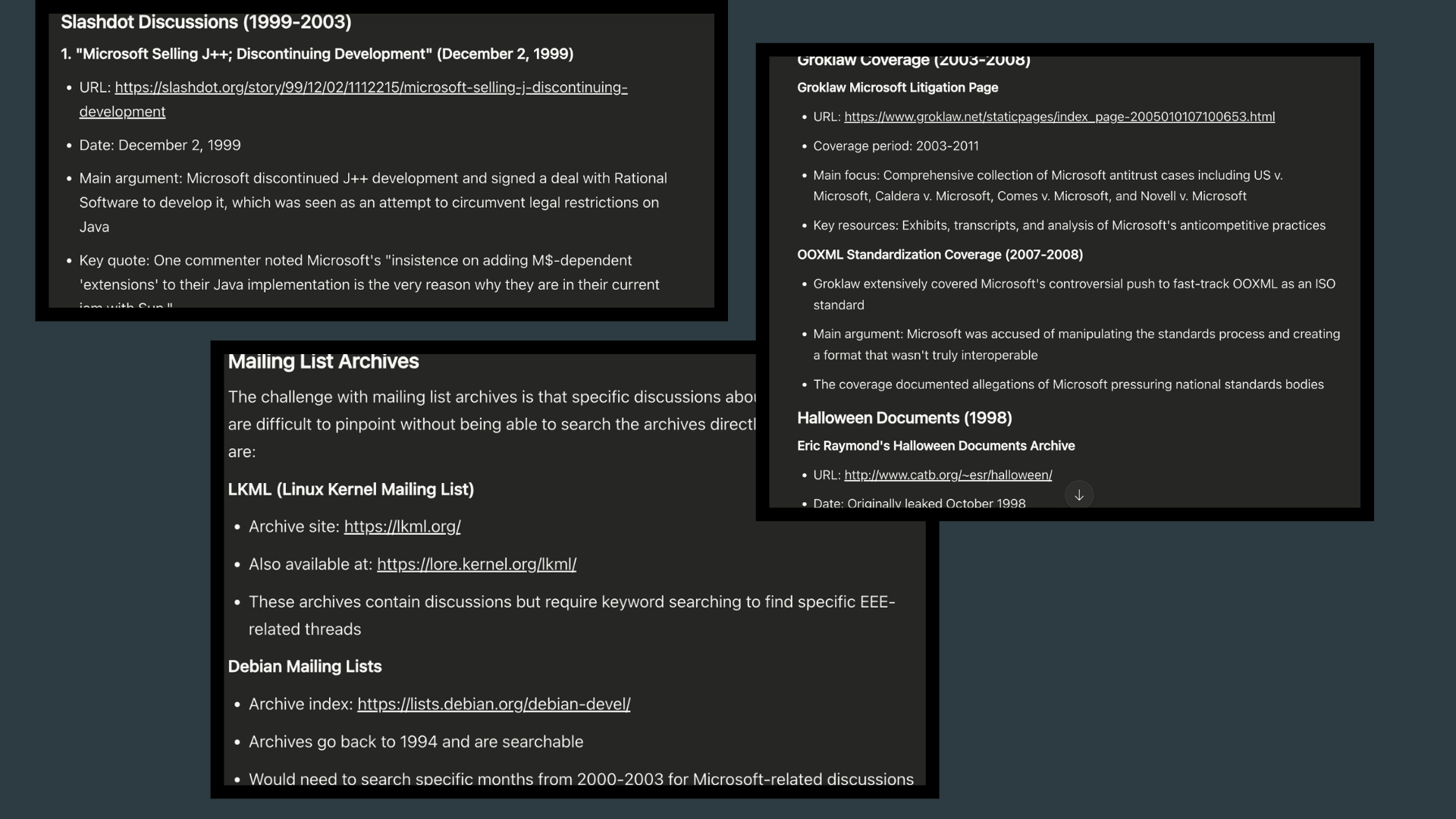

Finding obscure sources is where LLMs save the most time. You can describe what you're looking for in plain language and get pointed toward documentation, forum posts, or archived blogs that would take ages to find through Google, because they're buried under SEO spam and sponsored links (which basically makes Google a marketing agency rather than a search engine, but that's a topic for a different post). I asked an LLM to find early critiques of Microsoft's "embrace, extend, extinguish" strategy from the 90s. Got links to Slashdot threads, old Groklaw posts, and mailing list archives within minutes. The screenshots above show what that search pulled up. It took 5 minutes instead of hours digging through dead links and archive sites.

Connecting dots across decades of tech history is tedious work. The story runs from Linux kernel development (1991 to now) to the BSD licensing wars, the rise of GitHub, and corporate open source washing. LLMs can build timelines and show how events connect without you manually cross-referencing dates and sources. You still have to verify everything, but the mapping happens faster.

Building arguments means understanding both sides. LLMs can summarize pro-corporate arguments dispassionately. I don't want to spend hours reading Microsoft's justifications for closed-source ecosystems, but I need to understand their position to argue against it. LLMs give me the CliffsNotes version so I can focus on their core claims and build stronger counterarguments.

Real Example

I wanted to track how corporations shifted from "open source is cancer" to "we love open source" once they realized they could co-opt it. Asked an LLM to find statements from major tech CEOs about open source from 2000 to 2020.

It came back with Steve Ballmer calling Linux "a cancer" in 2001, Microsoft acquiring GitHub in 2018, Google's Android strategy, and Apple's open source theater while locking down iOS. The timeline showed the exact moment corporations realized they could use open source for free labor while keeping control.

That research would've taken days. Took 30 minutes with an LLM doing the grunt work.

Second example. I needed to understand how proprietary software creates learned helplessness. An LLM helped me find academic papers on software literacy, studies on user behavior in walled gardens, and anecdotal evidence from tech forums. It pulled sources from education research, psychology, and computer science.

The output gave me a reading list ranked by relevance. Saved me from reading 40 papers to find the 5 that mattered.

What This Isn't

This isn't about letting LLMs write your arguments. That's how you end up with soulless, generic takes that sound like your blogger neighbor from New York who just found out about ChatGPT. LLMs don't have opinions. They can't write with conviction because they don't believe anything.

You still need to read the sources. You still need to think. You still need to write in your own voice. LLMs just handle the information retrieval and initial organization.

People who use LLMs to replace thinking end up with weak arguments. People who use them to accelerate research get better results.

Practical Application

Pick a position you want to argue. Something technical where you need historical context and multiple sources. Ask an LLM to find primary sources, build timelines, and summarize opposing viewpoints.

Verify everything it gives you. Check the sources exist. Read the actual documents. Make sure the connections make sense.
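For the "check the sources exist" step, a short script beats clicking through forty links by hand. Here's a minimal sketch in Python using only the standard library. `fetch_status` and `check_sources` are hypothetical helper names, and it assumes you've already copied the LLM's links into a list. All it tells you is whether a URL answers with a non-error status. It can't tell you whether the page says what the LLM claims it says, and some servers reject `HEAD` requests outright, so treat "broken" as "check by hand", not "fake".

```python
import http.client
import urllib.error
import urllib.request
from typing import Callable, Iterable


# Hypothetical helper: returns the HTTP status code for a URL, or 0 if the
# request couldn't be made at all (not a URL, DNS failure, timeout, etc.).
def fetch_status(url: str, timeout: float = 10.0) -> int:
    try:
        # HEAD avoids downloading the whole page; urlopen follows redirects,
        # so a moved-but-alive page reports the status of where it landed.
        req = urllib.request.Request(
            url, method="HEAD", headers={"User-Agent": "source-check/0.1"}
        )
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, just with an error code (404, 410, 405...).
        return err.code
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, timeouts and connection resets are OSError subclasses;
        # ValueError covers strings that aren't URLs in the first place.
        return 0


# Hypothetical helper: splits LLM-suggested URLs into (url, status) pairs
# that answered with 2xx/3xx and pairs that didn't. Duplicates are checked once.
def check_sources(
    urls: Iterable[str],
    fetch: Callable[[str], int] = fetch_status,
) -> tuple[list[tuple[str, int]], list[tuple[str, int]]]:
    ok: list[tuple[str, int]] = []
    broken: list[tuple[str, int]] = []
    for url in dict.fromkeys(urls):  # dedupe while keeping the original order
        status = fetch(url)
        (ok if 200 <= status < 400 else broken).append((url, status))
    return ok, broken
```

Call `check_sources(urls)` on the list the LLM gave you. Anything in `broken` goes on the manual-check pile, and everything in `ok` still has to be read before it goes anywhere near your argument.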

Use what you learned to write your own argument. You assemble the pieces the LLM finds into something that matters.

For the open source research, I ended up with a 15-page document of sources, timelines, and key arguments. Took a few hours instead of a few weeks. The final writeup is still taking some time because writing can't be automated if you want it to be worth reading.

LLMs won't make you smarter. They'll make you faster if you're already smart enough to use them right. Most people aren't, which is why most "AI"-generated content reads like corporate slop.

Don't be most people.